Insight

January 28, 2026

The Next Phase of AI: Technology, Infrastructure, and Policy in 2025–2026

Executive Summary

- As artificial intelligence (AI) evolves toward advanced, autonomous models, 2025 saw increased experimentation across industries, and a surge in global AI investment and competition.

- With technology moving from experimentation to broad adoption, the transition into 2026 places infrastructure and regulation at the core of the AI agenda; policy priorities include scaling infrastructure, accelerating AI innovation, and expanding AI use.

- This insight looks at the uncertainty around the continued growth of AI – particularly around how advanced models will reshape operations, products, labor, and government services; ultimately, success will depend on how well infrastructure and governance frameworks ensure deployment while minimizing risks.

Introduction

Artificial intelligence (AI) is evolving toward advanced models, including agentic systems capable of autonomous decision-making and action. In 2025, this evolution brought increased experimentation across industries, and a surge in global investment and competition as the world raced to exploit these technologies. Although Congress did not pass major legislation, the Trump Administration advanced executive actions that positioned AI as a strategic national priority for U.S. competitiveness.

In 2026, AI will move from experimentation to broader adoption as businesses and federal agencies integrate it as a strategic tool in their operations. This shift requires greater infrastructure capacity – including AI chips and data centers – and policy frameworks that enable deployment across sectors, putting infrastructure and regulation at the core of the AI agenda. That includes timely development of electricity generation – as rapidly increasing demand from AI data centers threatens to hinder AI infrastructure development – and policies that remove regulatory barriers to AI innovation and expand its use across the federal government.

This evolution creates new opportunities but also raises risks that require oversight and control. It introduces significant uncertainty – particularly around how advanced models will reshape operations, products, labor, and government services – and poses new challenges for policymakers. Ultimately, policy priorities will need to focus on expanding AI and energy infrastructure, while also putting policies in place to support deployment and manage risk – particularly around the future of work, liability, and governance for advanced systems.

AI in 2025

In 2025, artificial intelligence moved beyond its early generative phase toward more advanced, autonomous models. The AI boom of 2023 was largely driven by an early type of AI called unimodal generative AI – such as early versions of ChatGPT, which processed text requests to generate text answers – but the technology has since evolved rapidly. The year 2025 was characterized by a wave of multimodal AI – capable of processing not just text but also images and audio to generate more robust answers – and agentic AI systems, which can autonomously plan, make predictions, and take action with minimal human intervention. In 2025, 62 percent of organizations reported experiments with agentic workflows across varied industries such as healthcare, finance, retail, and customer service. AI has the potential to completely restructure these sectors.

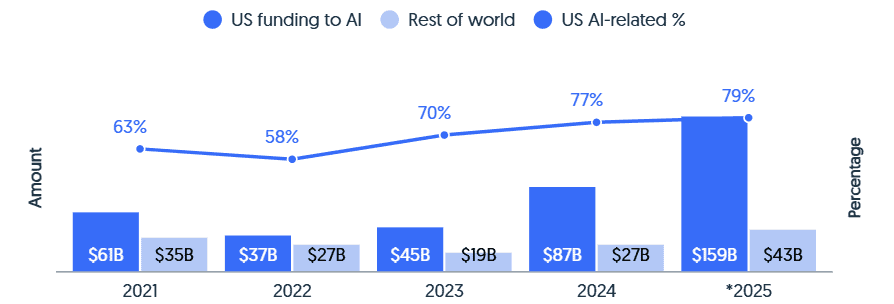

As AI advanced, countries raced to use these technologies, increasing global competition and turning AI into a global priority. This race led to increased global AI investment. As shown in Figure 1, AI funding has risen drastically over time, reaching $202 billion in 2025. The United States dominated AI funding, with $159 billion, or 79 percent, of investment going to U.S.-based companies. Notably, while AI investment went toward computing, infrastructure, and system adoption, persistent infrastructure constraints, such as energy supply, have become a limiting factor on further AI infrastructure development. Despite the wave of new investment, the year also saw only modest enterprise adoption. In agentic efforts, for example, up to 70–80 percent of initiatives struggled to scale, and only 5 percent of companies obtained meaningful financial returns. This landscape shifted national priorities toward infrastructure and increased concerns about an AI bubble in which money circulates rather than supporting real use and adoption.

Figure 1. U.S. vs. Rest of World Funding to AI

Source: CrunchBase
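The funding share quoted above can be checked with a few lines of arithmetic. The sketch below uses only the two dollar figures from the text ($202 billion total and $159 billion to U.S.-based companies); the variable and function names are illustrative and do not come from the source data.

```python
# Figures quoted in the text, in billions of U.S. dollars for 2025.
TOTAL_AI_FUNDING_BN = 202
US_AI_FUNDING_BN = 159


def share_percent(part, whole):
    """Return `part` as a percentage of `whole`."""
    return 100 * part / whole


# U.S. share of global AI funding.
us_share = share_percent(US_AI_FUNDING_BN, TOTAL_AI_FUNDING_BN)

# Everything not going to U.S.-based companies.
rest_of_world_bn = TOTAL_AI_FUNDING_BN - US_AI_FUNDING_BN
rest_of_world_share = share_percent(rest_of_world_bn, TOTAL_AI_FUNDING_BN)

print(f"U.S. share: {us_share:.1f}%")
print(f"Rest of world: ${rest_of_world_bn} billion ({rest_of_world_share:.1f}%)")
```

The U.S. share works out to roughly 78.7 percent, which rounds to the 79 percent cited, leaving about $43 billion for the rest of the world.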

As competition, adoption, and infrastructure shaped the global AI agenda, the Trump Administration led the way through executive actions such as Removing Barriers to American Leadership in AI, Ensuring a National Policy Framework, the Genesis Mission, and the AI Action Plan. All shared a common goal of sustaining U.S. leadership in AI by accelerating AI innovation, building infrastructure, and removing barriers, such as red tape, that may undermine this purpose. Notably, the recently released Genesis Mission aims to advance development of AI infrastructure and includes AI agents as critical research tools. While Congress did not pass comprehensive legislation last year, federal policymakers and agencies have been working on initiatives to implement these executive actions.

The AI Outlook for 2026

The transition into 2026 puts infrastructure and regulation at the core of the AI agenda. In 2026, AI will move from simply generating text and images to autonomous models that carry out entire tasks on their own. Agentic AI will move from experimentation to actual adoption at the enterprise level, becoming a resource for strategic business use cases. Predictions suggest that agentic AI will represent 10–15 percent of IT spending in 2026, and that 33 percent of enterprise software applications will include agentic AI by 2028. Physical AI – which embeds intelligence into the material world – is also gaining popularity, with adoption projected to reach 80 percent within two years, especially in manufacturing and defense.

This AI evolution creates new opportunities, but also real challenges that require prompt regulatory action. Notably, infrastructure constraints are a key priority. As previous American Action Forum analysis explains, forecasts suggest that global electricity demand from data centers will increase 50 percent by 2027 and by as much as 165 percent by 2030 compared to the level in 2023. Without sufficient electricity supply, domestic data center deployment could be lower than desired to sustain AI initiatives, potentially pushing data center developers to locate new projects in other countries.
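To give a sense of scale, the forecast increases can be translated into implied average annual growth rates. The sketch below is illustrative only: it assumes both percentages are measured against the 2023 level, as the text states, and it assumes smooth year-over-year compounding, which the underlying forecasts do not necessarily claim. The function name is hypothetical.

```python
BASE_YEAR = 2023

# Forecast increases over the 2023 level, as quoted in the text.
FORECAST_INCREASE = {
    2027: 0.50,  # 50 percent above 2023
    2030: 1.65,  # as much as 165 percent above 2023
}


def implied_annual_growth(total_increase, years):
    """Constant annual growth rate that compounds to `total_increase`.

    Assumes smooth compounding, an illustrative simplification.
    """
    multiple = 1 + total_increase
    return multiple ** (1 / years) - 1


for year, increase in FORECAST_INCREASE.items():
    years = year - BASE_YEAR
    rate = implied_annual_growth(increase, years)
    print(
        f"{year}: {1 + increase:.2f}x the 2023 level, "
        f"about {rate:.1%} per year over {years} years"
    )
```

Under these assumptions, a 50 percent increase by 2027 corresponds to growth of a little under 11 percent per year, and a 165 percent increase by 2030 to a little under 15 percent per year.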

This has made the timely development of electricity generation a key priority for Congress and federal agencies. Congress will focus on evaluating the federal permitting landscape for data centers and their supporting energy infrastructure. Bills on its agenda include the SPEED Act, ePermit Act, and PERMIT Act, which aim to streamline and reform federal permitting processes to accelerate infrastructure and energy development. Some agencies are also promoting infrastructure development. One example is the U.S. Department of Energy, which issued a Request for Offers inviting U.S. companies to build and power AI data centers on its Paducah, Kentucky, site. This action followed executive orders to leverage federal land assets to quickly deploy data centers and energy generation projects.

While infrastructure lays the foundation for AI development and adoption, infrastructure alone is not enough, and policymakers will also focus on removing regulatory barriers for AI. In 2026, federal preemption will be at a crossroads as state initiatives intensify. More than a hundred state AI laws were enacted last year, increasing concerns about a patchwork of regulations that might undermine AI development and deployment. The federal government will consider whether preemption is needed and how to appropriately regulate AI. Federal preemption, however, will have to be linked to a clear national standard to gain traction, as previous proposals have fallen short of enactment for lack of a federal standard to replace state action. Additionally, proposals such as the SANDBOX Act – which would establish a regulatory “sandbox” to allow AI developers the flexibility to test and deploy new technologies without being constrained by outdated regulations – would likely be prioritized to reduce barriers to AI innovation.

Finally, as sectors race to adopt AI, expect federal action to promote its adoption across the federal government. Nearly 90 percent of federal agencies are already using or intend to use AI, a trend that will continue in 2026. Notably, the State Department released its Enterprise Data and Artificial Intelligence Strategy for 2026. This plan aims to equip diplomats with AI tools for real-time decision-making and enhance AI data infrastructure across federal agencies. The policy choices made today will be critical in shaping how AI develops across the economy and how it ultimately transforms government services.

Unveiling Uncertainty

While this landscape creates new opportunities, it also brings uncertainty and raises risks that prompt calls for strong oversight and control. Widespread adoption of AI agents and other autonomous systems will likely reshape the division of labor between people and virtual workers, as agents will act as digital coworkers, leading to changes in the workplace that are still unclear. Also, as AI systems begin to execute code, sign contracts, and book transactions independently, the question of who is liable for “autonomous errors” remains largely unresolved. While these models would be able to make autonomous decisions across organizations and have a critical role in sectors such as scientific research – as the Genesis Mission seeks to achieve – they are still in a developmental stage and face evaluation challenges that require further governance, liability, and monitoring frameworks. In response, policy priorities will need to focus on expanding AI and energy infrastructure, while also putting in place policies to support deployment and manage risk – particularly around the future of work, liability, and governance for advanced systems.